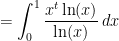

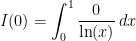

Leibniz’s rule is a powerful tool for evaluating integrals that resist all the standard techniques. Here is one of these:

Substitution, integration by parts, partial fractions: try them for yourself, and all of these methods will fail to give you an antiderivative in terms of elementary functions. We even had to introduce a special function, the sine integral Si(x), just to give it an antiderivative. Our method, however, focuses on evaluating definite integrals rather than finding antiderivatives. “Differentiation under the integral sign”, as we call it, is a direct consequence of Leibniz’s rule, which states

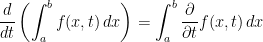
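To see the rule in action numerically, here is a minimal sketch in plain Python (standard library only) that compares both sides of the equation. The integrand f(x, t) = sin(tx) on [0, 1] is chosen purely for illustration. The left side is approximated with a central finite difference of the integral in t, and the right side by integrating the partial derivative ∂f/∂t = x cos(tx) directly. `simpson`, `F`, and `df_dt` are hypothetical helper names for this sketch.

```python
import math


def simpson(f, a, b, n=1000):
    """Composite Simpson's rule for the integral of f over [a, b]."""
    if n % 2:  # Simpson's rule needs an even number of subintervals
        n += 1
    h = (b - a) / n
    total = f(a) + f(b)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * f(a + i * h)
    return total * h / 3


def f(x, t):
    # Illustrative integrand with parameter t
    return math.sin(t * x)


def df_dt(x, t):
    # Partial derivative of f with respect to t (x held fixed)
    return x * math.cos(t * x)


def F(t):
    # F(t) = integral of f(x, t) dx over [0, 1]
    return simpson(lambda x: f(x, t), 0.0, 1.0)


t0, step = 1.3, 1e-5
lhs = (F(t0 + step) - F(t0 - step)) / (2 * step)  # d/dt of the integral
rhs = simpson(lambda x: df_dt(x, t0), 0.0, 1.0)   # integral of df/dt
print(lhs, rhs)
```

Both printed values should agree to many decimal places. For this particular integrand the exact value of either side is sin(t)/t + (cos t − 1)/t².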

The variable t serves only as a parameter, one we hope will make the integrand easier to handle once we take the partial derivative with respect to it. In essence, the rule states that, under fairly mild conditions (for example, when the integrand and its partial derivative with respect to t are continuous over a bounded region of integration), one may interchange the derivative operator and the integral. “But how does that help us with anything?” you ask. Well, here is an example: say you want to calculate this definite integral

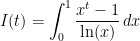

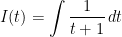

Here again, the usual methods will fail us. And we can’t apply our new rule if we don’t have a parameter t. So let’s introduce a new parameter that, when differentiated, will make it simpler to integrate. Ideally, we would want to get rid of that  in the denominator. What if we set

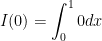

in the denominator. What if we set  in the exponent of our integrand? Than our whole expression would be a function of t, let’s call it

in the exponent of our integrand? Than our whole expression would be a function of t, let’s call it  , that we would later evaluate at 2:

, that we would later evaluate at 2:

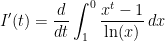

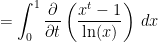

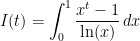

Differentiating with respect to t:

Well, this is easier to integrate! The hardest part is finding the right parameter, and admittedly, sometimes there won’t be one. But it often works nicely, just as in our example. We can continue, treating t as a constant in our integration:

Now, remember, this is the derivative of  , not

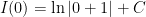

, not  . But that’s not what we want. So to get our original expression, we need to integrate our result with respect to t:

. But that’s not what we want. So to get our original expression, we need to integrate our result with respect to t:

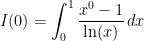
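A quick numerical check can make the differentiation step concrete. This sketch assumes the integral being evaluated is the classic $\int_0^1 \frac{x^2 - 1}{\ln x}\,dx$, so that the parametrized version is $I(t) = \int_0^1 \frac{x^t - 1}{\ln x}\,dx$. That assumption fits the steps described here (t placed in an exponent, a zero value at t = 0, evaluation at t = 2), but swap in your own integrand if it differs. The code compares a finite-difference estimate of $I'(t)$ with the integral of the partial derivative, $\int_0^1 x^t\,dx = \frac{1}{t+1}$. The names `simpson`, `integrand`, and `I` are hypothetical.

```python
import math


def simpson(f, a, b, n=20000):
    """Composite Simpson's rule for the integral of f over [a, b]."""
    if n % 2:
        n += 1
    h = (b - a) / n
    total = f(a) + f(b)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * f(a + i * h)
    return total * h / 3


def integrand(x, t):
    """ASSUMED integrand (x**t - 1) / ln(x) on [0, 1], for t >= 0."""
    if x == 0.0:
        return 0.0  # limit as x -> 0+, since ln(x) -> -infinity
    if x == 1.0:
        return t    # limit as x -> 1, by L'Hopital's rule
    lx = math.log(x)
    return math.expm1(t * lx) / lx  # expm1 keeps x**t - 1 accurate near x = 1


def I(t):
    # I(t) = integral of the assumed integrand over [0, 1]
    return simpson(lambda x: integrand(x, t), 0.0, 1.0)


t, step = 2.0, 1e-3
finite_diff = (I(t + step) - I(t - step)) / (2 * step)  # numerical I'(t)
# Differentiating under the integral sign: d/dt of (x**t - 1)/ln(x) is x**t
under_sign = simpson(lambda x: x ** t, 0.0, 1.0)
print(finite_diff, under_sign, 1 / (t + 1))  # all three should be close to 1/3
```

Simpson’s rule struggles slightly near x = 0, where the integrand behaves like −1/ln x, but that error barely depends on t and largely cancels in the finite difference.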

But what is C? Well, let’s see if we can find an “initial” condition from our original integrand.

What happens if we set t = 0? Then the whole integral equals zero, which gives us the condition we need.

Knowing this condition, let’s see what our constant is:

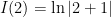

Okay, so we don’t have to worry about the constant, since it equals zero. Now we want to evaluate our new expression at t = 2, because 2 is the value that t replaced in the original integrand. We then get:

And there is our exact result! Interestingly, we had to solve a more general problem and then specialize it to ours. What we did also allows us to generalize:

This technique not only lets us evaluate some otherwise stubborn integrals, but also lets us generalize the result to other parameter values! Sometimes, we actually need to add a parameter to the integrand before we can evaluate it. The technique was popularized by Richard Feynman himself, and this is what he wrote about it in his book Surely You’re Joking, Mr. Feynman!:

“I had learned to do integrals by various methods shown in a book that my high school physics teacher Mr. Bader had given me. [It] showed how to differentiate parameters under the integral sign – it’s a certain operation. It turns out that’s not taught very much in the universities; they don’t emphasize it. But I caught on how to use that method, and I used that one damn tool again and again. [If] guys at MIT or Princeton had trouble doing a certain integral, [then] I come along and try differentiating under the integral sign, and often it worked. So I got a great reputation for doing integrals, only because my box of tools was different from everybody else’s, and they had tried all their tools on it before giving the problem to me.”
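Coming back to the worked example, the generalized result can also be checked numerically. This sketch assumes the parametrized integral is $I(t) = \int_0^1 \frac{x^t - 1}{\ln x}\,dx$ (inferred from the steps described, not stated explicitly in the text), which under that assumption should equal $\ln(1 + t)$ for t > −1. It uses a plain midpoint rule, which never evaluates the endpoints x = 0 and x = 1 where the integrand would need a limit. The function name `I` and the sample values of t are illustrative choices.

```python
import math


def I(t, n=20000):
    """Midpoint-rule estimate of the ASSUMED integral
    I(t) = integral over [0, 1] of (x**t - 1) / ln(x) dx.
    Midpoints never touch x = 0 or x = 1, so no limits are needed."""
    h = 1.0 / n
    total = 0.0
    for i in range(n):
        x = (i + 0.5) * h
        lx = math.log(x)
        total += math.expm1(t * lx) / lx  # x**t - 1, computed accurately
    return total * h


for t in (0.0, 0.5, 1.0, 2.0, 5.0):
    print(f"t = {t}: numeric {I(t):.6f}, ln(1 + t) = {math.log1p(t):.6f}")
```

If the assumption holds, each row should agree to roughly six decimal places, with the t = 2 row reproducing ln 3 ≈ 1.098612.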

We know that ∑ 1/n, the harmonic series, diverges. But what about its alternating version? And if it converges, what is its sum? Here is the series:

, we have the alternating harmonic series: