So the other day I was thinking about the complex representation of some trig functions, which are derived from Euler’s Formula, and wanted to share some interesting ways of arriving at the Pythagorean identity.

Before jumping into the complex numbers, let’s try to understand this equation, $\sin^2\theta + \cos^2\theta = 1$, using simple trig and geometry. It’s called the “Pythagorean” identity because it’s usually derived from the Pythagorean theorem. How? Let’s see:

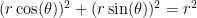

Imagine a unit circle (actually, don’t worry about the unit part) centered at the origin and a right triangle inscribed in it. The length of its hypotenuse will be equal to the radius $r$, the x-coordinate will be the length of one of its shorter sides, and the y-coordinate will be the length of the other. Now we know from the Pythagorean theorem that

$$x^2 + y^2 = r^2$$

And then we can use some trigonometry to express $x$ and $y$ in terms of $r$, sine and cosine. We’ll let our angle $\theta$ be the angle that our hypotenuse makes with the x-axis. Let’s write down what we know about $\cos\theta$ and $\sin\theta$:

$$\cos\theta = \frac{x}{r}, \qquad \sin\theta = \frac{y}{r}$$

Or

$$x = r\cos\theta, \qquad y = r\sin\theta$$

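We can then substitute these last equations in for $x$ and $y$ in our Pythagorean equation:

$$r^2\cos^2\theta + r^2\sin^2\theta = r^2$$

Dividing through by $r^2$ (we can do this because the radius $r$ is never zero):

$$\cos^2\theta + \sin^2\theta = 1$$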

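The geometric argument can also be checked numerically. The following is a minimal Python sketch rather than part of the derivation itself: the helper name `point_on_circle`, the radius, and the sample angles are arbitrary choices made for illustration. It builds the point $(x, y) = (r\cos\theta, r\sin\theta)$ and confirms that $x^2 + y^2 = r^2$, and that dividing by $r^2$ leaves 1.

```python
import math


def point_on_circle(r, theta):
    """Return (x, y) for the point at angle theta on a circle of radius r.

    Hypothetical helper, named here only for illustration.
    """
    return r * math.cos(theta), r * math.sin(theta)


r = 2.5  # any nonzero radius works; this value is arbitrary
for theta in (0.0, 0.3, 1.0, 2.0, 4.5):
    x, y = point_on_circle(r, theta)
    # Pythagorean theorem: x^2 + y^2 should equal r^2
    assert math.isclose(x**2 + y**2, r**2)
    # Dividing both sides by r^2 leaves cos^2 + sin^2 = 1
    assert math.isclose((x / r) ** 2 + (y / r) ** 2, 1.0)
```

The comparison uses `math.isclose` instead of `==` because floating-point rounding means the two sides rarely match bit for bit.
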
And there we go, we find our original Pythagorean identity. But now, let’s prove it in a very different but interesting way: using complex numbers! We can actually do this in more than one way, so let’s start with the first method. Now remember Euler’s formula:

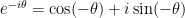

But what happens if we replace $\theta$ with $-\theta$?

$$e^{-i\theta} = \cos(-\theta) + i\sin(-\theta)$$

Ideally we want to get rid of the negative sign inside the cosine and sine functions. So let’s think about what happens when we take the cosine of an angle $\theta$ and the cosine of the angle $-\theta$. Well, the cosine doesn’t change, right? This is just the fact that cosine is an even function, so

$$\cos(-\theta) = \cos\theta$$

Now we need to know what happens to the sine of an angle $\theta$ when we take the sine of $-\theta$. We can use the fact that sine is an odd function, so

$$\sin(-\theta) = -\sin\theta$$

So the sine becomes negative when we take the negative angle. We can now substitute these values into our equation to replace all (well, all except one) of the $-\theta$ with $\theta$:

$$e^{-i\theta} = \cos\theta - i\sin\theta$$

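As a quick sanity check of this step, here is a small Python sketch using only the standard `math` and `cmath` modules. The helper name `exp_minus_i_theta` and the sample angles are made up for illustration. It checks the even/odd symmetry of cosine and sine, then compares $e^{-i\theta}$ computed directly against $\cos\theta - i\sin\theta$.

```python
import cmath
import math


def exp_minus_i_theta(theta):
    """cos(theta) - i*sin(theta), the claimed value of e^{-i*theta}.

    Hypothetical helper, named here only for illustration.
    """
    return complex(math.cos(theta), -math.sin(theta))


for theta in (0.0, 0.5, 1.0, 2.0, -3.0):
    # Cosine is even, sine is odd
    assert math.isclose(math.cos(-theta), math.cos(theta))
    assert math.isclose(math.sin(-theta), -math.sin(theta), abs_tol=1e-15)
    # e^{-i*theta} computed directly should match cos(theta) - i*sin(theta)
    assert cmath.isclose(cmath.exp(-1j * theta), exp_minus_i_theta(theta))
```
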
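Combining this equation with Euler’s original formula:

$$\begin{aligned} e^{i\theta} &= \cos\theta + i\sin\theta \\ e^{-i\theta} &= \cos\theta - i\sin\theta \end{aligned}$$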

Notice that if we add both of these together, the sines will “cancel out” and we can solve for cosine. After adding them, we have

$$e^{i\theta} + e^{-i\theta} = 2\cos\theta$$

Solving for cosine:

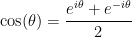

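And we have the complex exponential representation of cosine! Now let’s try to solve for sine by subtracting the second equation from the first:

$$\begin{aligned} e^{i\theta} &= \cos\theta + i\sin\theta \\ e^{-i\theta} &= \cos\theta - i\sin\theta \end{aligned}$$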

After subtracting, we have one equation:

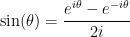

Solving for sine:

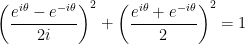

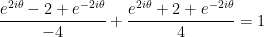

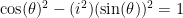

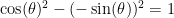
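To see the two exponential representations at work, here is a short Python sketch that evaluates $\frac{e^{i\theta} + e^{-i\theta}}{2}$ and $\frac{e^{i\theta} - e^{-i\theta}}{2i}$ and compares them with the ordinary `math.cos` and `math.sin`. The function names `cos_from_exp` and `sin_from_exp` and the sample angles are illustrative choices only.

```python
import cmath
import math


def cos_from_exp(theta):
    """cos(theta) written as (e^{i*theta} + e^{-i*theta}) / 2."""
    return (cmath.exp(1j * theta) + cmath.exp(-1j * theta)) / 2


def sin_from_exp(theta):
    """sin(theta) written as (e^{i*theta} - e^{-i*theta}) / (2i)."""
    return (cmath.exp(1j * theta) - cmath.exp(-1j * theta)) / 2j


for theta in (0.0, 0.5, 1.0, 2.0, -3.0):
    # Both helpers return complex numbers whose imaginary part is numerically zero
    assert cmath.isclose(cos_from_exp(theta), math.cos(theta), abs_tol=1e-12)
    assert cmath.isclose(sin_from_exp(theta), math.sin(theta), abs_tol=1e-12)
```

The small `abs_tol` allows for rounding noise in the imaginary part and for values that are exactly zero, such as $\sin 0$.
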

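Alright, so now that we have equations for both sine and cosine, we can try to square them and add them together to make sure that they do indeed add to 1:

$$\cos^2\theta + \sin^2\theta = \left(\frac{e^{i\theta} + e^{-i\theta}}{2}\right)^2 + \left(\frac{e^{i\theta} - e^{-i\theta}}{2i}\right)^2 = \frac{e^{2i\theta} + 2 + e^{-2i\theta}}{4} - \frac{e^{2i\theta} - 2 + e^{-2i\theta}}{4}$$

And just like magic (or just like math) we are left with

$$\cos^2\theta + \sin^2\theta = \frac{4}{4} = 1$$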

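The squaring step is easy to get wrong by a sign, because $(2i)^2 = -4$. Here is a minimal Python sketch that squares both exponential expressions and adds them. The helper name `squared_terms` and the test angles are assumptions made for illustration.

```python
import cmath


def squared_terms(theta):
    """Return (cos^2, sin^2) computed from the exponential forms.

    Hypothetical helper, named here only for illustration.
    """
    e_plus = cmath.exp(1j * theta)
    e_minus = cmath.exp(-1j * theta)
    cos_sq = ((e_plus + e_minus) / 2) ** 2
    # Dividing by 2i and squaring gives a factor of 1/(2i)^2 = -1/4
    sin_sq = ((e_plus - e_minus) / 2j) ** 2
    return cos_sq, sin_sq


for theta in (0.0, 0.5, 1.0, 2.0, -3.0):
    cos_sq, sin_sq = squared_terms(theta)
    # The e^{2i*theta} and e^{-2i*theta} terms cancel, leaving 4/4 = 1
    assert cmath.isclose(cos_sq + sin_sq, 1.0, abs_tol=1e-12)
```
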
And we have confirmed the Pythagorean identity. There is, however, another way, and it’s much simpler and shorter. Recall Euler’s formula:

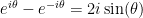

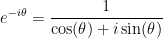

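Now let’s take the reciprocal of both sides:

$$\frac{1}{e^{i\theta}} = \frac{1}{\cos\theta + i\sin\theta}$$

We can also rewrite this as

$$e^{-i\theta} = \frac{1}{\cos\theta + i\sin\theta}$$

But we also know that

$$e^{-i\theta} = \cos\theta - i\sin\theta$$

Using this, we can set both of the right-hand sides equal to each other:

$$\cos\theta - i\sin\theta = \frac{1}{\cos\theta + i\sin\theta}$$

And if we eliminate the fraction by multiplying both sides by the reciprocal of the right-hand side,

$$(\cos\theta - i\sin\theta)(\cos\theta + i\sin\theta) = 1$$

Now the mathematician inside you should recognize the left-hand side as a difference of squares:

$$\cos^2\theta - i^2\sin^2\theta = 1$$

Since $i^2 = -1$, this is just

$$\cos^2\theta + \sin^2\theta = 1$$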

Voilà!
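This shorter route can be checked numerically too. The sketch below is plain Python with the standard `math` and `cmath` modules. The helper name `conjugate_product` and the sample angles are illustrative assumptions. It confirms that the reciprocal of $e^{i\theta}$ equals $\cos\theta - i\sin\theta$, and that the product $(\cos\theta - i\sin\theta)(\cos\theta + i\sin\theta)$ comes out as 1.

```python
import cmath
import math


def conjugate_product(theta):
    """(cos t - i sin t)(cos t + i sin t), which the argument says equals 1.

    Hypothetical helper, named here only for illustration.
    """
    z = complex(math.cos(theta), math.sin(theta))  # cos t + i sin t
    return z.conjugate() * z


for theta in (0.0, 0.5, 1.0, 2.0, -3.0):
    z = complex(math.cos(theta), math.sin(theta))
    # The reciprocal of e^{i*theta} is e^{-i*theta} = cos t - i sin t
    assert cmath.isclose(1 / cmath.exp(1j * theta), z.conjugate(), abs_tol=1e-12)
    # The product expands to cos^2 t + sin^2 t
    assert cmath.isclose(conjugate_product(theta), 1.0, abs_tol=1e-12)
```
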

And if you’re wondering why I did that, it’s because this expression can be expanded as a series:

We’ll let the series start at

and so